Briefly however, the new deployment model involves deployment to a specially constructed SQL DB otherwise known as 'SSISDB' (at least in this CTP) which appears under its own node in SSMS - with its own GUI options. The SSISDB database is the SSIS 'Catalog' and includes the concepts of environments and parameters to allow run-time configuration of packages. Therefore SSIS is now 'environment aware' and there is no need to have to store configuration locations outside of SSIS in Windows environment variables and so on - which was always a bit awkward. Parameters can now be created on a project level removing the need to create Parent package configurations. All of this can be administered by using views and stored procedures, the API or the aforementioned SSMS.

However the one thing I was left wondering was: 'how do I execute a package under the new configuration model without using the SSMS GUI?'. DTExec still exists but does not appear to have options to use the new environment variables (at least in the current CTP). Happily perusal of the new MSDN documentation reveals the answer.

Firstly create and environment called 'env1', deploy an SSIS project called 'Project' with a package called 'Package1' to a folder called 'Test'. See Jamie's blog to set these up. Then do the following. First of all you need to retrieve the appropriate reference id for the project and environment:

Then we need to tell the SSIS Catalog that we want to execute the package with the particular reference:

DECLARE @execution_id BIGINT

DECLARE @reference_id BIGINT

SELECT @reference_id = e.reference_id

FROM catalog.project_environments e

INNER JOIN catalog.projects p

ON e.project_id = p.project_id

WHERE e.environment_name = 'env1'

AND p.name = 'Project'

EXECUTE catalog.create_execution

@folder_name = 'Test',

@project_name = 'Project',

@package_name = 'Package1.dtsx',

@reference_id = @reference_id,

@execution_id = @execution_id OUTPUT

Finally tell SSIS to execute using the execution id created above:

EXECUTE catalog.start_execution @execution_id = @execution_id

A couple of notes:

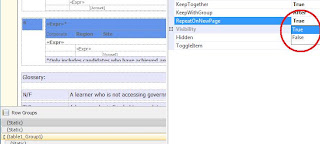

1. The package referenced by 'create_execution' has to have the 'Entry-point package' property set in Visual Studio:

2. There is an unused parameter in the above call to 'create_execution' - '@use32bitruntime' - use this on 64-bit environments if you want to force SSIS to execute in 32-bit mode.

This leaves two overall configuration options as I see it. You could either:

1. Have one SSIS 'environment' for every application you have. This means that the SSIS environment referenced at runtime would be the same across Dev, Test, Live and so on. Obviously the parameters themselves would be different!

2. Have all of your application/network environments (ie Dev, Test, Live) created in every instance of the SSIS Catalog and reference the appropriate one at runtime. This is obviously more flexible but probably carries more risk of running the wrong configuration on the wrong environment - with potentially serious consequences!