Interesting video here ('web cast' maybe? What's the difference?) about visualising data - focusing on tables, graphs and dashboards. If you don't know what a 'bullet graph', or a 'sparkline' is then this is for you. Most of it is non-product specific as well.

May sound a bit dry but this is one area of BI you can't ignore and can result in all of the behind-the-scenes effort going to waste.

Tuesday, 18 November 2008

Saturday, 1 November 2008

Child vs Parent Package Configurations contd..

Not so much a Part 2 (I will get round to it honest..) but a real-world example of the advantage of parent configurations. My current project involves migrating data for a major Norwegian bank, and needless to say we are using a parent package to set the configuration of the child packages actually doing the migration.

As often happens in IT projects we weren't in posession of all of the requirements before we started development. One of the requested changes was significant. The client wanted to use multiple target environments for each release. In other words, our migration server would need to migrate data into n target servers. However, we had baked the target connection string into a database table, which was read by the parent package, so we would have to update the database table every time we wanted to migrate to a different target. I felt this would be a cumbersome and risky operation at runtime. Not only could the connection string be incorrectly updated but we ran the risk of updating the wrong configuration or even wiping out all the configurations. On top of that, there was the possibility that the client might be making the changes themselves. Therefore I thought a change to our package config was required.

The option that seemed most sensible in the circumstances was to allow the target to be set at runtime on the command-line. We were using dtexec to run the migration anyway and using the /SET parameter you can set package properties including package variables (you should always set properties through variables as this insulates yourself against changes to packages).

What has all this got to do with child vs parent package configurations? Well, to make this change for us was simple - we only had to change the parent package and delete the configuration from the database table. If we had been configuring child packages directly we would have had to change every child package using that configuration. As we were doing lookups against the target server this would have been most of the child packages. As the project stands that would have been 3 packages - but had we decided to this in a months time it would probably be 6-8. With that many to do there would have been quite an impact on the project.

Therefore be aware that the most unlikely changes can be requested sometimes, and therefore its almost always best to try and anticipate these changes in your configuration design. Particularly when the costs of such a design are (in my view at least) relatively trivial.

As often happens in IT projects we weren't in posession of all of the requirements before we started development. One of the requested changes was significant. The client wanted to use multiple target environments for each release. In other words, our migration server would need to migrate data into n target servers. However, we had baked the target connection string into a database table, which was read by the parent package, so we would have to update the database table every time we wanted to migrate to a different target. I felt this would be a cumbersome and risky operation at runtime. Not only could the connection string be incorrectly updated but we ran the risk of updating the wrong configuration or even wiping out all the configurations. On top of that, there was the possibility that the client might be making the changes themselves. Therefore I thought a change to our package config was required.

The option that seemed most sensible in the circumstances was to allow the target to be set at runtime on the command-line. We were using dtexec to run the migration anyway and using the /SET parameter you can set package properties including package variables (you should always set properties through variables as this insulates yourself against changes to packages).

What has all this got to do with child vs parent package configurations? Well, to make this change for us was simple - we only had to change the parent package and delete the configuration from the database table. If we had been configuring child packages directly we would have had to change every child package using that configuration. As we were doing lookups against the target server this would have been most of the child packages. As the project stands that would have been 3 packages - but had we decided to this in a months time it would probably be 6-8. With that many to do there would have been quite an impact on the project.

Therefore be aware that the most unlikely changes can be requested sometimes, and therefore its almost always best to try and anticipate these changes in your configuration design. Particularly when the costs of such a design are (in my view at least) relatively trivial.

Saturday, 30 August 2008

Child vs Parent Package Configurations Part 1

One of my colleagues - Sam Loud - prompted a mention from the venerable Jamie Thompson on the thorny subject of SSIS configuration (in fact he starts with the problems with logging configuration - something I've also encountered, but the subject for another post I think). In fact we were debating it in the office that very day .

Sam's beef with parent package configurations is that it makes the development of child packages more onerous. This is due to the inability of child packages to use master configurations at run-time, unlike a direct configuration to a config file or database table which the child package can use. An example of this follows:

Firstly create a new SSIS project in BIDS/Visual Studio and create two packages - 'Parent' and 'Child':

We'll create a very simple child package to read the @@VERSION variable in SQL:

Add a connection manager to the package pointing to the master database on a convenient server (in this case my local machine):

Add an Execute SQL Task selecting the connection manager created above and type "SELECT @@VERSION" as the SQLStatement property:

Create a package variable with a 'Child' scope called 'ConnString', and set the connection manager to use the variable through the Property Expressions Editor:

You'll note at this point that you need to type the connection string into the variable to get the package to work. This of course is what Sam is complaining about having to do with Master configurations. However the advantage becomes clearer when adding a second child package.

Finally create a simple configuration using an XML file to set the ConnString variable value:

Executing the package should result in success. This is an example of a direct child configuration. Adding a second child package - Child2 - running through the same steps as for Child you can skip the step typing the connection string into the variable - simply add the variable and the configuration (remembering to click 'Reuse' when prompted). You should be able to execute Child2 successfully with the package picking the connection string up from the config file.

Now for the Parent configuration:

In the Parent package create a variable called 'ConnString' with Parent scope and use the XML configuration created for the Child.dtsx package. Create a File Connection Manager, selecting 'Existing file' in the drop-down and the file location of Child.dtsx (I'm not using MSDB for this example though this might be advisable in practice). Add a Execute Package task using the File Connection Manager created earlier.

Switch to the Child.dtsx package and remove the file configuration created earlier and create a 'Parent package variable' configuration adding 'ConnString' as the Parent variable:

You should be able to execute the Parent package and see BIDS switch to the Child.dtsx package and execute that in turn.

But what happens when you replace the file configuration in Child2.dtsx, with Parent package variable and try and execute it by itself? It will fail - as the ConnString variable in Child2.dtsx is blank and it will not 'look-up' the variable in Parent.dtsx. To get it to work you need to manually type the connection string into the ConnString variable. This is the crux of Sam's complaint. Using parent configuration means typing the connection string every time you create a package rather than just the once. There are associated disadvantages in that you can't test the development configuration until the parent package is run and so on.

The advantages of parent package configuration relate to larger, more complex projects:

1. Configuration is centralised and 'hidden' from child packages:

This makes changing the configuration (from database to environment variable for example)infinitely easier if you have a large number of packages. This might be an issue you can't control the environment your packages are running on, or you have multiple different possible environments to target.

2. Development amongst multiple developers without direct configuration is easier:

In my experience when developing packages in a team of 3 or more people direct configurations can become a pain. This is unless everyone has their machine set up exactly the same way. I've often found myself disabling the direct configurations in order to get a package to use a different database (local rather than server-based for example) or filepath to the one specified in the configuration. Using parent configurations leaves you as a developer free to set the variables as you please.

So in conclusion - because this post has gone on long enough - use child packages with direct configuration when the project is small, the environments you're using are well-defined and the number of packages are low - probably no more than 6-8. Otherwise the safest course of action is parent configuration.

In a future post I'll look at the possible solutions and/or work-arounds for working with parent configurations without typing configuration in for every package.

Sam's beef with parent package configurations is that it makes the development of child packages more onerous. This is due to the inability of child packages to use master configurations at run-time, unlike a direct configuration to a config file or database table which the child package can use. An example of this follows:

Firstly create a new SSIS project in BIDS/Visual Studio and create two packages - 'Parent' and 'Child':

We'll create a very simple child package to read the @@VERSION variable in SQL:

Add a connection manager to the package pointing to the master database on a convenient server (in this case my local machine):

Add an Execute SQL Task selecting the connection manager created above and type "SELECT @@VERSION" as the SQLStatement property:

Create a package variable with a 'Child' scope called 'ConnString', and set the connection manager to use the variable through the Property Expressions Editor:

You'll note at this point that you need to type the connection string into the variable to get the package to work. This of course is what Sam is complaining about having to do with Master configurations. However the advantage becomes clearer when adding a second child package.

Finally create a simple configuration using an XML file to set the ConnString variable value:

Executing the package should result in success. This is an example of a direct child configuration. Adding a second child package - Child2 - running through the same steps as for Child you can skip the step typing the connection string into the variable - simply add the variable and the configuration (remembering to click 'Reuse' when prompted). You should be able to execute Child2 successfully with the package picking the connection string up from the config file.

Now for the Parent configuration:

In the Parent package create a variable called 'ConnString' with Parent scope and use the XML configuration created for the Child.dtsx package. Create a File Connection Manager, selecting 'Existing file' in the drop-down and the file location of Child.dtsx (I'm not using MSDB for this example though this might be advisable in practice). Add a Execute Package task using the File Connection Manager created earlier.

Switch to the Child.dtsx package and remove the file configuration created earlier and create a 'Parent package variable' configuration adding 'ConnString' as the Parent variable:

You should be able to execute the Parent package and see BIDS switch to the Child.dtsx package and execute that in turn.

But what happens when you replace the file configuration in Child2.dtsx, with Parent package variable and try and execute it by itself? It will fail - as the ConnString variable in Child2.dtsx is blank and it will not 'look-up' the variable in Parent.dtsx. To get it to work you need to manually type the connection string into the ConnString variable. This is the crux of Sam's complaint. Using parent configuration means typing the connection string every time you create a package rather than just the once. There are associated disadvantages in that you can't test the development configuration until the parent package is run and so on.

The advantages of parent package configuration relate to larger, more complex projects:

1. Configuration is centralised and 'hidden' from child packages:

This makes changing the configuration (from database to environment variable for example)infinitely easier if you have a large number of packages. This might be an issue you can't control the environment your packages are running on, or you have multiple different possible environments to target.

2. Development amongst multiple developers without direct configuration is easier:

In my experience when developing packages in a team of 3 or more people direct configurations can become a pain. This is unless everyone has their machine set up exactly the same way. I've often found myself disabling the direct configurations in order to get a package to use a different database (local rather than server-based for example) or filepath to the one specified in the configuration. Using parent configurations leaves you as a developer free to set the variables as you please.

So in conclusion - because this post has gone on long enough - use child packages with direct configuration when the project is small, the environments you're using are well-defined and the number of packages are low - probably no more than 6-8. Otherwise the safest course of action is parent configuration.

In a future post I'll look at the possible solutions and/or work-arounds for working with parent configurations without typing configuration in for every package.

Thursday, 7 August 2008

SSIS and Oracle providers - another warning

As you may have gathered I'm working with Oracle and SSIS a lot at the moment - and here's another gotcha with it:

Colleagues finalising a release encountered this error when testing SSIS packages on a 64-bit version of Windows 2003 R2:

The upshot is that the Oracle provider can't cope with parentheses (ie brackets) in the filepath of programs connecting to Oracle using the provider. Yes - you read that right.

Those of you who know anything about Windows running on X64 architecture know that 32-bit applications are stored under a seperate programs folder ie:

Fine you might say, don't run it under a 32-bit context then. Unfortunately if you're having to extract data from Excel (as we are) you have to - there is no 64-bit provider for Excel. Happily there is a fix and a workaround. The bug fix is patch number 5059238 available from Oracles MetaLink site. However you need a 'Support Identifier' which we haven't got hold of yet. I'll edit this post if we (or when) we try this fix.

The work-around is simply to avoid using parentheses when calling executables. The way this is done is by using the old-school MS-DOS short-name for the file path. Hence

Colleagues finalising a release encountered this error when testing SSIS packages on a 64-bit version of Windows 2003 R2:

ORA-06413: Connection not openThis is discussed at length in this MSDN thread amongst others.

The upshot is that the Oracle provider can't cope with parentheses (ie brackets) in the filepath of programs connecting to Oracle using the provider. Yes - you read that right.

Those of you who know anything about Windows running on X64 architecture know that 32-bit applications are stored under a seperate programs folder ie:

c:\Program Files (x86)\The sorts of programs that live under this folder are Business Intelligence Development Studio (ie BIDS) which is 32-bit only, and the 32-bit version of DTExec.exe - which executes SSIS packages. So you can see the problem; any SSIS package that uses the Oracle provider and runs in a 32-bit context will error.

Fine you might say, don't run it under a 32-bit context then. Unfortunately if you're having to extract data from Excel (as we are) you have to - there is no 64-bit provider for Excel. Happily there is a fix and a workaround. The bug fix is patch number 5059238 available from Oracles MetaLink site. However you need a 'Support Identifier' which we haven't got hold of yet. I'll edit this post if we (or when) we try this fix.

The work-around is simply to avoid using parentheses when calling executables. The way this is done is by using the old-school MS-DOS short-name for the file path. Hence

c:\Program Files (x86)\Microsoft SQL Server\90\DTS\Binn\DTExec.exebecomes

C:\Progra~2\Microsoft SQL Server\90\DTS\Binn\DTExec.exeThis avoids having a parenthesis in the file-path and therefore the Oracle provider won't error. In fact you can launch BIDS/Visual Studio from the command-line in a similar way - so you can check the connection managers work:

C:\Progra~2\Microsoft Visual Studio 8\Common7\IDE\devenv.exeSo to top this post off here is an example of a command-line that will run an SSIS package using the Oracle provider under a 32-bit context on a 64-bit server:

"C:\Progra~2\Microsoft SQL Server\90\DTS\Binn\DTExec.exe” /DTS "\MSDB\[Package Name]" /SERVER [Server Name]

Tuesday, 5 August 2008

More on Oracle connectors

A useful wiki here containing a plethora of info on SSIS connectivitity. There is a table here listing the different Oracle Providers. This also includes the points I made in my previous post. Since it appears under my post on a Google search for 'Oracle providers' I thought I'd try and redress the balance a little...

Monday, 28 July 2008

Oracle SSIS providers - careful!

Been pretty busy over the last couple of months (hence the hiatus in posting), but I've built up plenty of useful material to post about in the next few weeks (time permitting!).

Whats in a name? Well quite a lot when it comes to loading data into Oracle using SSIS. The details are described here but it boils down to using "Oracle Provider for OLE DB" rather than "Microsoft OLE DB Provider for Oracle".

The difference? Well as the article states:

Bear in mind however you need to install the Oracle client to get the Oracle Provider for OLE DB which is here.

Whats in a name? Well quite a lot when it comes to loading data into Oracle using SSIS. The details are described here but it boils down to using "Oracle Provider for OLE DB" rather than "Microsoft OLE DB Provider for Oracle".

The difference? Well as the article states:

[Microsoft OLE DB Provider for Oracle] has not been enhanced since Oracle 8Therefore it doesn't support certain data types and 64-bit for starters.

Bear in mind however you need to install the Oracle client to get the Oracle Provider for OLE DB which is here.

Saturday, 19 April 2008

Multi-user SSIS environment? Vote now!

In a post here Jamie Thompson suggests extending the container object in SSIS to allow for code re-use and crucially allow more than one developer to work on one package at a time. This would be a big help on large projects. He's put in a Connect request which currently has 9 votes. If you want to see this feature in the next version of SQL vote now!

Personally I'd like to see better branching and merging support so that its easier to compare packages. There is Smart Diff, part of BIDS Helper which is better but it's:

a) an add-on

b) still needs a Source Control client

c) still forces you to do manual XML merges

One can but hope...

Personally I'd like to see better branching and merging support so that its easier to compare packages. There is Smart Diff, part of BIDS Helper which is better but it's:

a) an add-on

b) still needs a Source Control client

c) still forces you to do manual XML merges

One can but hope...

Thursday, 3 April 2008

PerformancePoint Monitoring Data Source Connection Problems - Solution for XP

Long title for a post! Over the last day or two I've been getting to grips with PerformancePoint 2007 , in particular the Monitoring Server product - including KPI's, scorecards, dashboards and so on. Be warned - there are some prerequisites including ASP.NET AJAX extensions which aren't mentioned on the PerformancePoint website.

Long story short, I came up against the problem detailed in full by Nick Barclay here. If you're running Vista or Windows 2003 you should read that post for the solution. However was I using XP.

Briefly, the problem was that creating a Data Source in the Dashboard Designer results in the following message:

The reason for the message is that PerformancePoint is written in ASP.NET and as such, under IIS 6 it uses several Application Pools. Its the account that these app pools run under that is used to connect to a Data Source. Unfortunately XP uses IIS 5.1 so there's no app pools and therefore Nick's solution doesn't work. However there is a solution that I stumbled across.

On IIS 5 ASP.NET websites run under the ASPNET account. However, changing the priviliges (ie adding to the Administrators group) is bad practice and more fundamentally won't work. The solution is to give the ASPNET account priviliges on the target Data Source. This is easy enough for SQL Server - simply add the ASPNET account as a user and give it access to the relevant database. The Analysis Services solution is slightly more involved.

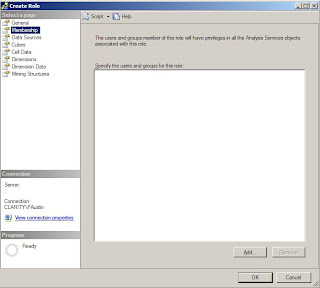

Basically you need to add a Role to the Analysis Services cube and add the ASPNET account as a member. Here's some screenshots showing the process in SSMS.

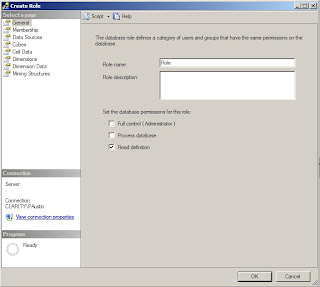

Connect to your cube and navigate to the 'Role' folder and right-click on it. Choose 'New Role...':

On the Create Role dialogue click the 'Full Control (Administrator)' box, and give it an appropriate name (for the purposes of this example we'll leave it as 'Role'):

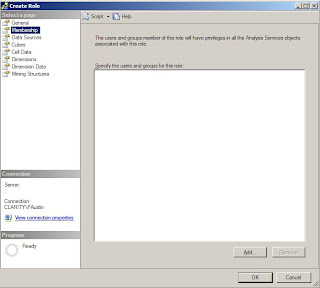

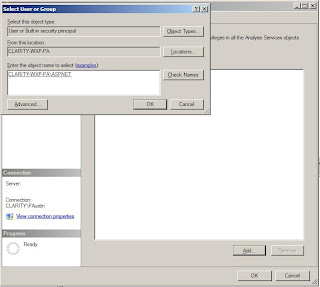

Click the 'Membership' property in the right-hand pane. Then click the 'Add...' button:

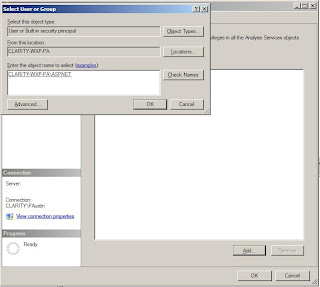

Type ASPNET as the object name and click 'Check Names' - it should underline it:

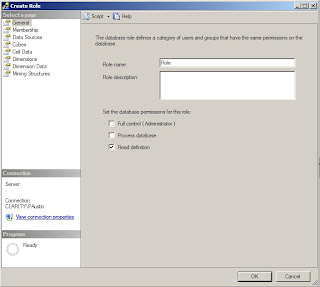

Click OK, and OK again on the 'Create Role' dialogue. Fire up the Dashboard Designer and you should find the Data Source connects fine.

Long story short, I came up against the problem detailed in full by Nick Barclay here. If you're running Vista or Windows 2003 you should read that post for the solution. However was I using XP.

Briefly, the problem was that creating a Data Source in the Dashboard Designer results in the following message:

"The PerformancePoint Server could not connect to the specified data source. Verify that either the current user or application pool user has Read permissions to the data source, depending on your security configuration. Also verify that all required connection information is provided and correct"

The reason for the message is that PerformancePoint is written in ASP.NET and as such, under IIS 6 it uses several Application Pools. Its the account that these app pools run under that is used to connect to a Data Source. Unfortunately XP uses IIS 5.1 so there's no app pools and therefore Nick's solution doesn't work. However there is a solution that I stumbled across.

On IIS 5 ASP.NET websites run under the ASPNET account. However, changing the priviliges (ie adding to the Administrators group) is bad practice and more fundamentally won't work. The solution is to give the ASPNET account priviliges on the target Data Source. This is easy enough for SQL Server - simply add the ASPNET account as a user and give it access to the relevant database. The Analysis Services solution is slightly more involved.

Basically you need to add a Role to the Analysis Services cube and add the ASPNET account as a member. Here's some screenshots showing the process in SSMS.

Connect to your cube and navigate to the 'Role' folder and right-click on it. Choose 'New Role...':

On the Create Role dialogue click the 'Full Control (Administrator)' box, and give it an appropriate name (for the purposes of this example we'll leave it as 'Role'):

Click the 'Membership' property in the right-hand pane. Then click the 'Add...' button:

Type ASPNET as the object name and click 'Check Names' - it should underline it:

Click OK, and OK again on the 'Create Role' dialogue. Fire up the Dashboard Designer and you should find the Data Source connects fine.

Friday, 7 March 2008

Details of Windows 2008/SQL 2008 performance emerging

Some pretty stellar performance being recorded for SQL 2008 on Windows 2008 on the SQL Server Performance Blog. Record ETL performance of over 2TB an hour! As they say themselves - not a certified benchmark but impressive nonetheless, and the sort of performance you'd expect of a data appliance and/or Informatica, Ab Initio et al. White paper to follow which should be an interesting read.

Some benchmarks that are certified on the other hand are the Transaction Processing Council (TPC). HP have posted the first 10TB TPC-H benchmark for Windows 2008/SQL Server 2008, achieving over 1000 queries per minute at less than $40 per query. Oracle and DB2 still do better at this scale - both in terms of raw performance and price/performance but SQL is catching up with every release. It'll be interesting to see the 3TB results because there wasn't much in it under SQL 2005.

Does any of this matter? Well yes and no. Clearly you're not going to embark on a 10TB data warehouse with only a handful of TPC benchmarks to guide you, but whereas previously you might not consider SQL Server for this scale I think its becoming more viable. Nationwide's Basel II data warehouse was predicted to get to that size a couple of years a ago and that was running on SQL 2005 and Windows 2003.

Some benchmarks that are certified on the other hand are the Transaction Processing Council (TPC). HP have posted the first 10TB TPC-H benchmark for Windows 2008/SQL Server 2008, achieving over 1000 queries per minute at less than $40 per query. Oracle and DB2 still do better at this scale - both in terms of raw performance and price/performance but SQL is catching up with every release. It'll be interesting to see the 3TB results because there wasn't much in it under SQL 2005.

Does any of this matter? Well yes and no. Clearly you're not going to embark on a 10TB data warehouse with only a handful of TPC benchmarks to guide you, but whereas previously you might not consider SQL Server for this scale I think its becoming more viable. Nationwide's Basel II data warehouse was predicted to get to that size a couple of years a ago and that was running on SQL 2005 and Windows 2003.

Friday, 29 February 2008

OLAP report BI survey released

The venerable OLAP Report has released the seventh annual BI survey for 2007. The report is reviewed here by Intelligent Enterprise. Not had the opportunity to read it - it's not exactly cheap at $4 995 for three users, hopefully Clarity will see fit to get the credit card out.

Some headlines however; customer 'loyalty' scores increased for Microstrategy, Applix and Microsoft Analysis Services and declined for Hyperion Essbase, Cognos and SAP.

Overall satisfaction with BI is high - 71% of survey respondents reporting that business goals had 'largely' been met.

On the flip-side less than 9% of employees use BI with cost and performance being the biggest obstacles to a wider deployment. 'BI for the masses' not exactly here yet...

Some headlines however; customer 'loyalty' scores increased for Microstrategy, Applix and Microsoft Analysis Services and declined for Hyperion Essbase, Cognos and SAP.

Overall satisfaction with BI is high - 71% of survey respondents reporting that business goals had 'largely' been met.

On the flip-side less than 9% of employees use BI with cost and performance being the biggest obstacles to a wider deployment. 'BI for the masses' not exactly here yet...

Thursday, 7 February 2008

Restating history or "What was the number on this date?"

Interesting article from Joy Mundy here about meeting the need to 'restate' history or do point-in-time analysis from a data warehouse. It involves joining on a business key which might raise a few eyebrows. Not sure how you'd go about doing this in an OLAP tool - a difficutly she highlights herself.

In a recent project I solved a similar problem by loading a snapshot of all relevant facts every day, and doing distinct counts in Analysis Services. A 'snapshot date' dimension allowed for analysis on any given date. This approach worked well because the data-sets were small (only amounting to 2000 rows a day) - Joy's might be a more elegant solution if you have millions of rows to contend with.

In a recent project I solved a similar problem by loading a snapshot of all relevant facts every day, and doing distinct counts in Analysis Services. A 'snapshot date' dimension allowed for analysis on any given date. This approach worked well because the data-sets were small (only amounting to 2000 rows a day) - Joy's might be a more elegant solution if you have millions of rows to contend with.

Monday, 4 February 2008

Conferences

Lots of SQL and BI events occuring over the next few months.

First-up is the Gartner Euro BI conference at which there will be a Clarity-Integration stand and some of my colleagues. Not me though unfortunately...

Second is the SQLBits II - The SQL in Birmingham next month. Puns aside its shaping up pretty well with sessions on 2008 as well as some in-depth 2005 sessions with MVPs. Only catch is that its on a Saturday. What gives with that?

Thirdly PASS Europe in April in Germany - not a lot of detail on this at the moment - although a couple of MVP's are doing sessions.

Last but certainly not least is the MS BI conference in Seattle in October. Went to this last year and it was great, with some informative and useful sessions - particularly from SQL CAT - oh and a free trip up the Space Needle courtesy of Panorama wasn't bad either...

First-up is the Gartner Euro BI conference at which there will be a Clarity-Integration stand and some of my colleagues. Not me though unfortunately...

Second is the SQLBits II - The SQL in Birmingham next month. Puns aside its shaping up pretty well with sessions on 2008 as well as some in-depth 2005 sessions with MVPs. Only catch is that its on a Saturday. What gives with that?

Thirdly PASS Europe in April in Germany - not a lot of detail on this at the moment - although a couple of MVP's are doing sessions.

Last but certainly not least is the MS BI conference in Seattle in October. Went to this last year and it was great, with some informative and useful sessions - particularly from SQL CAT - oh and a free trip up the Space Needle courtesy of Panorama wasn't bad either...

More useful blogs

Sometimes it amazes me how much good stuff people in the SQL community are blogging about. I for one have difficulty keeping up with it all. Stumbled across this by Michael Entin none other than the Tech Lead for SSIS - not someone I'd heard of I'm ashamed to say. Anyhoo lots of informative posts particularly about SQL 2008, including one about the improvement in multi-threading execution trees and another about compatability between VS2008/SQL2008 and VS2005/SQL2005 (note - there are limitations).

I found another detailed and interesting post here about Change Data Capture which could be really big news for BI on SQL2008 providing that a) your source system will be migrated onto 2008, and b) you can persuade your source system DBA's to switch it on. One of the major issues frequently encountered working with large source systems is figuring out the delta - ie whats changed since you last did an extract. Change Data Capture (CDC) could be a neat solution. Time to start bending your fellow DBA's ears now..

I found another detailed and interesting post here about Change Data Capture which could be really big news for BI on SQL2008 providing that a) your source system will be migrated onto 2008, and b) you can persuade your source system DBA's to switch it on. One of the major issues frequently encountered working with large source systems is figuring out the delta - ie whats changed since you last did an extract. Change Data Capture (CDC) could be a neat solution. Time to start bending your fellow DBA's ears now..

Friday, 25 January 2008

SSIS Expression for YYYYMMDD (ISO date)

Scratching around this afternoon trying to find a crib for the SSIS expression for the date format YYYYMMDD (ie 20080125 for today). Darren's Wiki site has it here but it appeared to be having a few problems and the page was timing out (seems to working now). So I'm sticking it here should I need it again (and I probably will):

Update: Thanks to Anonymous below who pointed out that the code-page ought to be 1252 (rather than 1253) since this is usually the default for most SQL installs (not that 1253 will break it). As you can see I've updated it. Also if you want a Unicode version you can do this:

(DT_STR,4,1252)YEAR(GETDATE()) + RIGHT("0" + (DT_STR,2,1252)MONTH(GETDATE()),2) + RIGHT("0" + (DT_STR,2,1252)DAY(GETDATE()),2)

Update: Thanks to Anonymous below who pointed out that the code-page ought to be 1252 (rather than 1253) since this is usually the default for most SQL installs (not that 1253 will break it). As you can see I've updated it. Also if you want a Unicode version you can do this:

(DT_WSTR,4)YEAR(GETDATE()) + RIGHT("0" + (DT_WSTR,2)MONTH(GETDATE()),2) + RIGHT("0" + (DT_WSTR,2)DAY(GETDATE()),2)

Subscribe to:

Comments (Atom)